AdaBoost - Adaptive Boosting

Published on: April 25, 2021

AdaBoost, short for Adaptive Boosting, was introduced by Freund and Schapire. It was the first practical boosting algorithm and remains one of the most widely used and studied. Boosting is a general strategy for learning a "strong model" by combining multiple simpler ones (weak models, or weak learners).

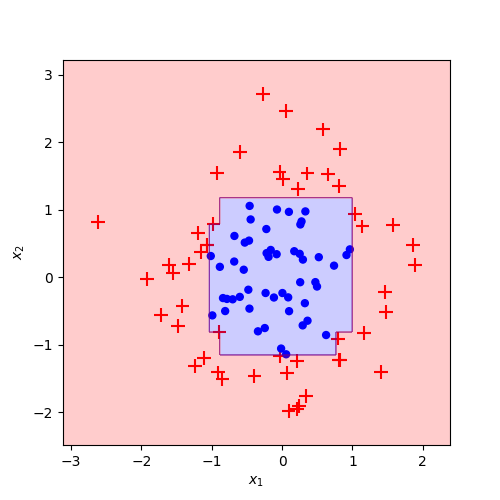

A "weak learner" is a model that performs at least slightly better than chance. AdaBoost can be applied to any classification algorithm, but it is most often used with Decision Stumps: Decision Trees with only one decision node and two leaves.

A Decision Stump on its own is a poor predictor. A fully grown decision tree can combine decisions on many features to predict the target value. A stump, on the other hand, can only use a single feature to make its prediction.
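To make the idea concrete, here is a minimal sketch of a decision stump for labels in {-1, +1}. The function name `stump_predict` and its parameters (`feature`, `threshold`, `polarity`) are hypothetical names chosen for illustration, not part of any library.

```python
def stump_predict(x, feature, threshold, polarity=1):
    """Predict +1 or -1 by comparing ONE feature of sample x to a threshold.

    polarity=1 predicts +1 above the threshold; polarity=-1 flips the rule.
    """
    label = 1 if x[feature] > threshold else -1
    return polarity * label


# Toy samples with two features each; the stump only ever looks at feature 0.
samples = [[1.0, 7.0], [2.0, 3.0], [3.0, 9.0], [4.0, 1.0]]
predictions = [stump_predict(x, feature=0, threshold=2.5) for x in samples]
print(predictions)  # [-1, -1, 1, 1]
```

Whatever values the second feature takes, the prediction does not change, which is why a single stump is such a limited model.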

How does the AdaBoost algorithm work?

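- Initialize the sample weights uniformly as $w_i^{(1)} = \frac{1}{N}$ for $i = 1, \dots, N$, where $N$ is the number of training samples.

- For each iteration $t = 1, \dots, T$: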

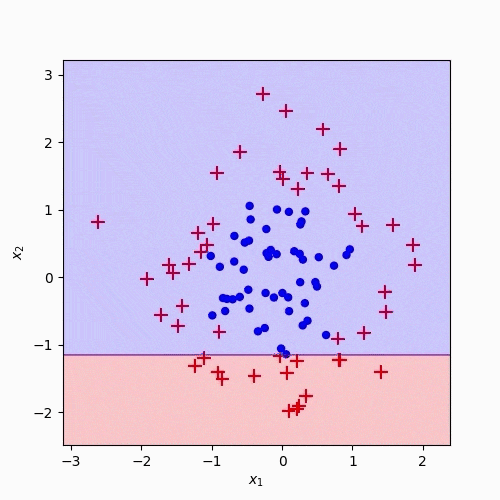

Step 1: A weak learner (e.g. a decision stump) is trained on the weighted training data

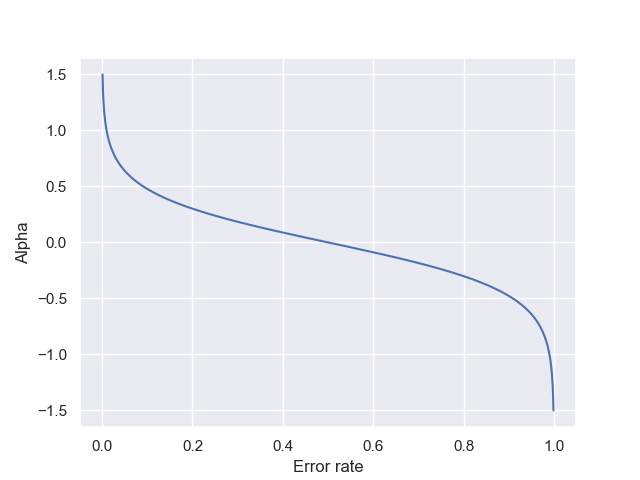

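Step 2: After training, the weak learner is assigned a weight based on its weighted accuracy, so more accurate learners get a larger say in the final prediction

Step 3: The weights of misclassified samples are increased so that the next weak learner focuses on them

Step 4: Renormalize the weights so that they sum to 1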

- Make predictions using a weighted linear combination of the weak learners
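For reference, the steps above can be written out as equations. This is the common textbook formulation for binary labels $y_i \in \{-1, +1\}$; the symbols ($w_i^{(t)}$ for sample weights, $h_t$ for the weak learner at iteration $t$, $\epsilon_t$, $\alpha_t$, $Z_t$) are notation assumed here for illustration.

```latex
\begin{align*}
\epsilon_t &= \sum_{i=1}^{N} w_i^{(t)} \, \mathbb{1}\left[h_t(x_i) \neq y_i\right]
  && \text{(weighted error of } h_t\text{)} \\
\alpha_t &= \tfrac{1}{2} \ln\frac{1 - \epsilon_t}{\epsilon_t}
  && \text{(Step 2: learner weight)} \\
w_i^{(t+1)} &= \frac{w_i^{(t)} \exp\left(-\alpha_t \, y_i \, h_t(x_i)\right)}{Z_t},
  \quad Z_t = \sum_{j=1}^{N} w_j^{(t)} \exp\left(-\alpha_t \, y_j \, h_t(x_j)\right)
  && \text{(Steps 3 and 4)} \\
H(x) &= \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t \, h_t(x)\right)
  && \text{(final prediction)}
\end{align*}
```

In this form, correctly classified samples are scaled down and misclassified ones are scaled up. After renormalization this gives the same weights as multiplying only the misclassified samples by $(1 - \epsilon_t)/\epsilon_t$.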

Code
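The following is a minimal from-scratch sketch of AdaBoost with decision stumps, using only the Python standard library. It assumes binary labels in {-1, +1} and the common textbook learner weight `0.5 * ln((1 - err) / err)`. All function names (`stump_predict`, `fit_stump`, `adaboost_fit`, `adaboost_predict`) are hypothetical, and the exhaustive stump search is chosen for readability rather than speed.

```python
import math


def stump_predict(x, feature, threshold, polarity):
    """Decision stump: one feature, one threshold, output in {-1, +1}."""
    return polarity if x[feature] > threshold else -polarity


def fit_stump(X, y, w):
    """Step 1: pick the stump with the lowest weighted error (exhaustive search)."""
    best = None
    for feature in range(len(X[0])):
        values = sorted({x[feature] for x in X})
        # Candidate thresholds: one below the minimum, plus midpoints between values.
        thresholds = [values[0] - 1.0]
        thresholds += [(a + b) / 2 for a, b in zip(values, values[1:])]
        for threshold in thresholds:
            for polarity in (1, -1):
                err = sum(
                    wi for xi, yi, wi in zip(X, y, w)
                    if stump_predict(xi, feature, threshold, polarity) != yi
                )
                if best is None or err < best[0]:
                    best = (err, feature, threshold, polarity)
    return best


def adaboost_fit(X, y, n_rounds=10):
    n = len(X)
    w = [1.0 / n] * n  # uniform initial sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, feature, threshold, polarity = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        # Step 2: weight of this weak learner, based on its weighted error.
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, feature, threshold, polarity))
        # Step 3: scale misclassified samples up and correct ones down.
        w = [
            wi * math.exp(-alpha * yi * stump_predict(xi, feature, threshold, polarity))
            for xi, yi, wi in zip(X, y, w)
        ]
        # Step 4: renormalize so the weights sum to 1.
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted sum of the stump predictions."""
    score = sum(a * stump_predict(x, f, t, p) for a, f, t, p in ensemble)
    return 1 if score >= 0 else -1


# Toy 1-D data set that no single stump can separate.
X = [[float(i)] for i in range(10)]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost_fit(X, y, n_rounds=3)
predictions = [adaboost_predict(ensemble, x) for x in X]
print(predictions == y)  # True: three stumps fit this toy set
```

On this toy data the best single stump misclassifies three of the ten points, while the weighted vote of three stumps classifies all of them correctly. For real use, a maintained implementation such as scikit-learn's `AdaBoostClassifier` (see the first link under Resources) is the safer choice.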

Resources

- https://scikit-learn.org/stable/modules/ensemble.html#adaboost

- https://www.youtube.com/watch?v=LsK-xG1cLYA

- https://blog.paperspace.com/adaboost-optimizer/

- https://en.wikipedia.org/wiki/AdaBoost

- https://geoffruddock.com/adaboost-from-scratch-in-python/

- https://www.cs.toronto.edu/~mbrubake/teaching/C11/Handouts/AdaBoost.pdf

- https://jeremykun.com/2015/05/18/boosting-census/

- https://ml-explained.com/blog/decision-tree-explained